Compliance as a right

As discussed above, automating the typical stages of an audit both results from and helps to increase the maturity of the process as a whole. Having implemented an appropriate solution, some organizations may think that is the end of the matter, and all that is left to do is keep the process running. Tasks are automated, monitoring is underway, and data is organized.

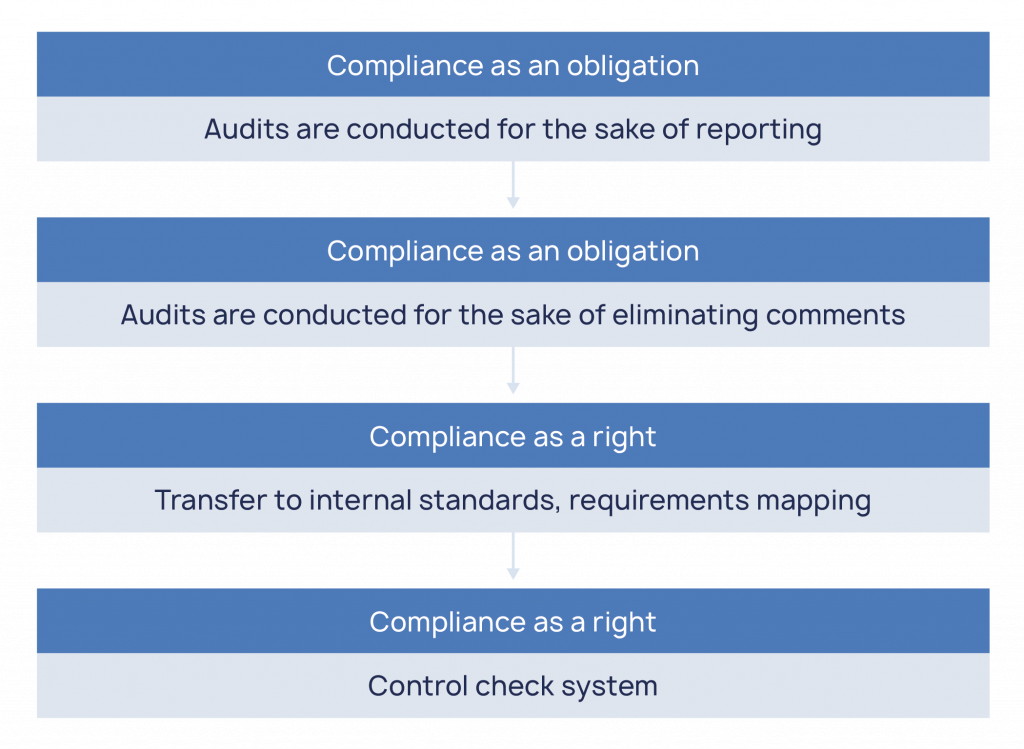

In fact, this is just the beginning. Organizations move to the next level of cyber security maturity when they stop conducting audits “because the regulator forces them to” and realize that audits can be used to respond to problems proactively.

Internal audits can be organized in many different ways, but they often start as cyber security employees gain experience and begin to form their own in-house standards. As they learn the various regulatory requirements, they feel the need for a metric that reflects the level of asset compliance without reference to specific external documents.

This is how internal standards and compliance assessment methodologies are born.

1. Internal audits

Internal standards (sets of requirements) reflect the experts' accumulated experience, their “visual erudition”. After conducting numerous audits, experts conclude that many legal requirements and best practices overlap in one way or another. When they find requirements that are similar in meaning, cyber security employees map them to a single generalized requirement. Together, these generalized requirements make up an internal verification checklist.

Requirements mapping is not trivial, even for experienced experts: it is difficult both analytically and organizationally. The source material on which each question was formulated should always be available, especially during audits, because no wording is perfect and the auditor may need to refer to the original.
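To keep the original source of each generalized question at hand, the mapping can be stored together with the requirement itself. The Python sketch below is a minimal illustration under assumed names: `SourceRequirement`, `InternalRequirement`, `map_source`, and the document identifiers and wordings are hypothetical, not the data model of any particular SGRC product.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SourceRequirement:
    """A clause from an external document (law, standard, best practice)."""
    document: str  # hypothetical document identifier
    clause: str    # clause number within that document
    text: str      # original wording the auditor may need to consult


@dataclass
class InternalRequirement:
    """A generalized requirement from the in-house checklist."""
    req_id: str
    wording: str
    sources: list[SourceRequirement] = field(default_factory=list)

    def map_source(self, source: SourceRequirement) -> None:
        """Link an external requirement that is similar in meaning (no duplicates)."""
        if source not in self.sources:
            self.sources.append(source)

    def originals(self) -> list[str]:
        """Return the original wordings behind this generalized requirement."""
        return [f"{s.document} {s.clause}: {s.text}" for s in self.sources]


# Two similar external clauses mapped to one internal question (illustrative data).
av = InternalRequirement("INT-07", "Antivirus tools must be regularly updated")
av.map_source(SourceRequirement("Standard A", "5.2", "Anti-malware signatures shall be kept current"))
av.map_source(SourceRequirement("Regulation B", "12.1", "Antivirus databases must be updated regularly"))
for line in av.originals():
    print(line)
```

Keeping the source clauses as structured records, rather than in a free-text comment, lets the auditor pull up the original wording for any question during the audit itself.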

Gradually, the internal standard becomes the main audit tool, and compliance with it is checked at regular intervals. Methodologists keep the list of questions up to date as legislation changes. Internal audits help identify potential violations before official audits begin, and they also foster a culture of compliance within the organization. While reporting could once be considered the final goal of an audit, internal audits make it clear that the true result is what the audit reveals and what is done about it, not the report itself.

Here, too, SGRC solutions make it possible to avoid duplicated effort. They can be used to:

– Maintain the regulatory framework under which audits are conducted.

– Generate audit questionnaires.

– Map similar legal requirements to one another.

– Transfer the results of audits against the internal checklist to audits against external requirements.

The last point can be the hardest to implement: the internal questionnaire and external regulatory documents may use different scales and assessment methodologies, and one internal question may cover several external requirements at once, not always evenly. The SGRC solution should not only perform such a transfer but also offer enough flexibility in its configuration to accommodate each organization's approach.
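Such a transfer has to reconcile different scales and uneven coverage. A minimal sketch, assuming a hypothetical internal scale of 0 to 3, a three-level external scale, and coverage weights that express how much of each external requirement a given internal question covers; the identifiers (`EXT-1`, `INT-07` and so on), weights and thresholds are all illustrative.

```python
from typing import Dict, Optional

INTERNAL_MAX = 3  # assumed internal scale: 0 (not met) .. 3 (fully met)

# Hypothetical mapping: external requirement -> {internal question: coverage weight}.
# A weight expresses how much of the external requirement the question covers.
COVERAGE = {
    "EXT-1": {"INT-07": 1.0},
    "EXT-2": {"INT-07": 0.3, "INT-12": 0.7},
}


def to_external_scale(ratio: float) -> str:
    """Map a 0..1 ratio onto an assumed three-level external scale."""
    if ratio >= 0.9:
        return "compliant"
    if ratio >= 0.5:
        return "partially compliant"
    return "non-compliant"


def transfer(internal_scores: Dict[str, int]) -> Dict[str, Optional[str]]:
    """Derive external assessments from internal checklist answers."""
    result: Dict[str, Optional[str]] = {}
    for ext_id, coverage in COVERAGE.items():
        if any(q not in internal_scores for q in coverage):
            result[ext_id] = None  # incomplete internal data: assess manually
            continue
        total_weight = sum(coverage.values())
        ratio = sum(
            w * internal_scores[q] / INTERNAL_MAX for q, w in coverage.items()
        ) / total_weight
        result[ext_id] = to_external_scale(ratio)
    return result


print(transfer({"INT-07": 3, "INT-12": 1}))
# {'EXT-1': 'compliant', 'EXT-2': 'partially compliant'}
```

Here an external requirement receives a value only when every internal question covering it has been answered; the rest are left for manual assessment. Another organization might prefer to renormalize over the answered questions instead, which is exactly the kind of setting the SGRC solution should let it adjust.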

There is one more step in the development of internal audits. Initially, the process follows the classical approach: the questionnaire is treated as a whole. The audit starts on a set schedule or trigger and ends when a compliance assessment has been issued for every requirement. However, not every response needs to be updated monthly, quarterly or yearly; for example, the existence of a cyber security (CS) policy needs to be checked less often than whether antivirus databases are up to date. This insight gives rise to a system of control checks: the same questions from the organization's internal standard, but checked asynchronously. Each control check has its own schedule and responsible staff, so employees are pulled into audit work only when needed.
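In its simplest form, a system of control checks gives each question its own interval and owner, and on any given day asks which checks are due. The sketch below assumes hypothetical intervals, owner names and dates; in practice these would come from the organization's internal standard and its assignment of responsibilities.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List, Optional


@dataclass
class ControlCheck:
    question_id: str               # question from the internal standard
    interval: timedelta            # how often the answer must be refreshed
    owner: str                     # responsible employee (hypothetical)
    last_checked: Optional[date] = None

    def is_due(self, today: date) -> bool:
        if self.last_checked is None:
            return True  # never checked: due immediately
        return today >= self.last_checked + self.interval


def due_checks(checks: List[ControlCheck], today: date) -> Dict[str, List[str]]:
    """Group due checks by owner, so staff are involved only when needed."""
    tasks: Dict[str, List[str]] = {}
    for check in checks:
        if check.is_due(today):
            tasks.setdefault(check.owner, []).append(check.question_id)
    return tasks


checks = [
    ControlCheck("CS policy exists", timedelta(days=365), "policy.owner", date(2024, 3, 1)),
    ControlCheck("Antivirus databases up to date", timedelta(days=7), "it.ops", date(2024, 5, 20)),
]
print(due_checks(checks, date(2024, 6, 1)))
# {'it.ops': ['Antivirus databases up to date']}
```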

Thus, the organization gradually develops an approach in which various aspects of CS are assessed regularly but not redundantly. At the same time, by the time an “official” audit takes place, most requirements already have up-to-date information, which is automatically transferred to the appropriate fields. Over time, the organization's CS metrics come to be built on such systems of control checks.

2. Compliance level calculation

An equally important part of developing audit processes is establishing an approach to calculating the final level of asset compliance with requirements. A compliance level is an indicator (quantitative or qualitative) that makes it possible to compare audit outcomes across assets, and for a single asset over different periods. Such indicators are used to monitor changes in the state of CS and to evaluate how effectively findings are remediated.

There is currently no unified approach to calculating the compliance level in cyber security. There are best practices, as well as methods prescribed in regulatory documents, but the proposed approaches differ widely. Many factors contribute to this:

– Which assets are considered target assets within audits? Information systems, business processes, organizations as a whole?

– What is considered a compliance level? The maturity level of CS processes? The percentage of compliance of an individual asset?

– etc.

As a result, many organizations develop their own calculation methodologies. This often happens organically: at first, there is simply a need to summarize the questionnaire results somehow, but as process maturity grows, the interpretation of the results becomes more sophisticated.

On the way to the calculation approach that suits them best, organizations usually go through the following steps:

– Assessing compliance with individual requirements qualitatively, using the scale the organization has adopted.

– Complementing the scale with quantitative intervals.

– Calculating the average of the assessments across the whole questionnaire, or the percentage of compliance with the document.

– Creating a qualitative scale to express the final level of compliance.

– Using weighting coefficients to reflect the importance of particular requirements.

– Calculating results at the level of requirement groups to track compliance within individual CS domains.

– Moving to more detailed calculations that take into account assessment results for groups and subgroups of requirements, weighting coefficients and, sometimes, the presence of open issues.
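The first five of these steps can be illustrated with a small calculation: qualitative grades are mapped to numbers, averaged with optional weights, and the resulting percentage is mapped back onto a qualitative scale. The grade values, weights and band thresholds below are assumptions chosen for illustration, not a recommended methodology.

```python
from typing import Dict, Optional

# Steps 1-2 (assumed): qualitative grades with numeric values.
SCALE = {"not met": 0.0, "partially met": 0.5, "largely met": 0.75, "met": 1.0}

# Step 4 (assumed): bands for the final qualitative level, highest first.
BANDS = [(85.0, "high"), (60.0, "medium"), (0.0, "low")]


def compliance_percent(answers: Dict[str, str],
                       weights: Optional[Dict[str, float]] = None) -> float:
    """Steps 3 and 5: weighted average of grades as a percentage.

    Requirements without an explicit weight count with weight 1.0.
    """
    weights = weights or {}
    total = sum(weights.get(req, 1.0) for req in answers)
    if total == 0:
        raise ValueError("nothing to assess")
    score = sum(weights.get(req, 1.0) * SCALE[grade] for req, grade in answers.items())
    return 100.0 * score / total


def final_level(percent: float) -> str:
    """Step 4: map the percentage onto the qualitative scale."""
    for threshold, label in BANDS:
        if percent >= threshold:
            return label
    return BANDS[-1][1]


answers = {"R1": "met", "R2": "partially met", "R3": "not met"}
plain = compliance_percent(answers)                  # 50.0
weighted = compliance_percent(answers, {"R1": 3.0})  # 70.0
print(plain, final_level(plain), weighted, final_level(weighted))
```

The same answers land in different bands depending on whether requirement `R1` is weighted as more important, which is why the choice of weights deserves as much attention as the scale itself.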

An SGRC solution lets employees experiment to find a convenient algorithm for calculating the final compliance index, thanks to its flexibility in configuring calculations and its support for a user-defined hierarchy of requirements.
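Calculating over a user-defined hierarchy usually means evaluating a tree: each group's result is the weighted average of its children, so results are available both for individual CS domains and for the asset as a whole. The sketch below assumes that structure plus a hypothetical rule that each open issue lowers a node's score by a fixed penalty; the domain names, weights, scores and the 0.05 penalty are illustrative.

```python
from dataclasses import dataclass, field
from typing import List, Optional

ISSUE_PENALTY = 0.05  # assumed: each open issue lowers a node's score by 0.05


@dataclass
class Node:
    """A requirement (leaf) or a group/subgroup of requirements."""
    name: str
    weight: float = 1.0
    score: Optional[float] = None      # leaf only: assessed value in 0..1
    children: List["Node"] = field(default_factory=list)
    open_issues: int = 0               # unresolved findings attached to this node


def evaluate(node: Node) -> float:
    """Weighted average over children, minus the open-issue penalty."""
    if node.children:
        total_w = sum(c.weight for c in node.children)
        value = sum(c.weight * evaluate(c) for c in node.children) / total_w
    elif node.score is not None:
        value = node.score
    else:
        raise ValueError(f"requirement {node.name!r} has not been assessed")
    return max(0.0, value - ISSUE_PENALTY * node.open_issues)


asset = Node("Asset", children=[
    Node("Access control", weight=2.0, children=[
        Node("Passwords", score=1.0),
        Node("MFA", score=0.5),
    ]),
    Node("Anti-malware", weight=1.0, children=[
        Node("AV updates", score=1.0, open_issues=1),
    ]),
])
for domain in asset.children:
    print(domain.name, round(evaluate(domain), 3))  # per-domain results
print("Asset", round(evaluate(asset), 3))
```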

3. Typical remarks

Another aspect worth mentioning in the context of CS process maturity is the adoption of so-called “typical remarks”, or typical issues.

As discussed earlier, the experts' accumulated experience can significantly change the approach to conducting audits. The same applies to the issue management process.

As experts gain experience, they begin to encounter the following challenges:

– Large volumes of historical data on detected violations. Classification by type or category does not provide enough detail to analyze them.

– A high proportion of similarly worded findings. Small differences in phrasing significantly increase the time needed to analyze even current lists of findings.

– The auditor's “blurred eye”: overlooking seemingly obvious violations while looking for an interesting case.

– Time wasted on writing issue descriptions: even when the wording is standard, the expert has to type it out or find it in historical data and copy it.

Trying to solve these problems leads experts to compile a list of typical issues. A typical issue is the standard wording of a violation that is most likely to be found when a specific requirement is not met. For example, for the requirement “Antivirus tools must be regularly updated”, a typical issue would be “Antivirus tools are not regularly updated”. The more expertise accumulates, the more cases the list can cover.
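A catalog of typical issues can be as simple as a mapping from a requirement to its standard wordings, from which a finding is created so that the auditor only adds the specifics and the evidence. The names `TYPICAL_ISSUES`, `Finding` and `new_finding`, as well as the sample details and file name, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical catalog: requirement -> typical issues most likely found when it is not met.
TYPICAL_ISSUES: Dict[str, List[str]] = {
    "INT-07": ["Antivirus tools are not regularly updated"],
}


@dataclass
class Finding:
    requirement_id: str
    wording: str                       # standard wording from the catalog
    details: str = ""                  # asset-specific details added by the auditor
    evidence: List[str] = field(default_factory=list)


def new_finding(requirement_id: str, details: str, evidence: List[str],
                index: int = 0) -> Finding:
    """Create a finding from a typical issue; the auditor supplies only the specifics."""
    wording = TYPICAL_ISSUES[requirement_id][index]
    return Finding(requirement_id, wording, details, list(evidence))


finding = new_finding("INT-07", "Databases on two workstations are 30 days old", ["av_report.pdf"])
print(finding.wording)
```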

What are the advantages of using such lists?

– Easy compilation of statistics and tracking of the most frequent issues, since the standard wording serves as a single reference point. This can suggest an additional direction for developing the CS system.

– Quickly ruling out or recording the most obvious cases, because the typical list supports the expert when assessing a requirement.

– Auditors spend less time forming the obvious part of the description and can focus on filling in other details and forming a list of evidence.

– Reduced risk of human error.
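Because every finding reuses the catalog's exact wording, finding the most frequent issues becomes a plain frequency count rather than a fuzzy-matching exercise. A minimal sketch using Python's `collections.Counter` on a hypothetical history of findings (the assets and the second wording are illustrative):

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical history of findings: (asset, standard wording of the typical issue).
history: List[Tuple[str, str]] = [
    ("Server A", "Antivirus tools are not regularly updated"),
    ("Server B", "Antivirus tools are not regularly updated"),
    ("Portal", "Password policy is not enforced"),
    ("Server A", "Password policy is not enforced"),
    ("Server C", "Antivirus tools are not regularly updated"),
]


def most_frequent(findings: List[Tuple[str, str]], top: int = 3) -> List[Tuple[str, int]]:
    """Count findings by standard wording; identical wording makes the count exact."""
    return Counter(wording for _, wording in findings).most_common(top)


for wording, count in most_frequent(history):
    print(f"{count:>3}  {wording}")
```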

An SGRC solution is a handy tool for maintaining such catalogs, mapping violations to requirements, automating the use of templates and visualizing the accumulated statistics in charts.

Thus, with SGRC systems, audits can become a powerful tool for cyber security monitoring. Information accumulated by other solutions gains context and comes together into a single picture, which helps determine the direction of further development. It is worth remembering, however, that implementing these systems alone will not solve existing problems. Maturity always implies a willingness to change processes rather than automate ineffective practices.